MSc-IT Study Material, June 2010 Edition

Computer Science Department, University of Cape Town

In this unit we are concerned with evaluation: how can we assess whether some artefact meets some criteria? Specifically, we are interested in how user interfaces can be evaluated in terms of their usability and how well they meet the clients' requirements. Preece et al. (1995), in Human Computer Interaction, cite four reasons for doing evaluation:

To understand the real world and work out whether designs can be improved to fit the workplace better.

To compare designs – which is best for given situations and users.

To decide if engineering targets have been met – is the design good enough to meet the specification.

To decide if the design meets standards.

The importance of evaluating usability is evidenced by the many consultancies that have sprung up with the development of the World Wide Web, offering to evaluate web sites for clients. These companies typically produce a report at the end of the evaluation telling their clients what they think is wrong with their web site and possibly what could be done to remedy the situation. Needless to say, they also offer web site redesign services. There are two main problems with such approaches to evaluation:

The client has no way of knowing how the results of the evaluation were arrived at and so cannot assess for themselves the suitability or coverage of the evaluation – whether it looked at the right kind of usability issues, and whether the approach taken was likely to find a good proportion of the problems.

The evaluation is typically conducted once a lot of time and effort has been put into designing and building the web site. This may have been wasted if the site needs a complete redesign.

In this unit we look at how evaluation can be performed and so you should gain an insight into resolving the first point – you will be able to perform evaluations and, importantly, understand which evaluations are appropriate for different situations, and what level of coverage can be expected.

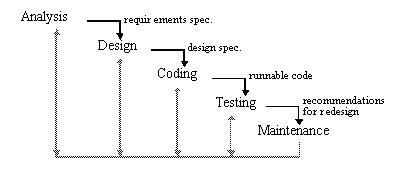

The second of the above points requires us to take a step back and think about when evaluation is appropriate. Conventionally, software development was formalised into a waterfall model (see Unit 3 for more details of software development techniques). In this model (illustrated in the following diagram) there was a strict flow of activity from stage to stage (with later additions of feedback to previous stages illustrated in grey).

At the first stage of this model, analysis of tasks (see Unit 8 for details of task analysis techniques), users, and the domain is carried out to produce a requirements specification. This document details what tasks the analyst believes are important, what kinds of users perform such tasks, and details of the domain in which the tasks are to be carried out. This document passes to the following design stage, where it is used to inform the design of data structures, system architectures, and user interfaces. These designs are described in a design specification, which feeds into the coding stage, which in turn produces runnable code. Finally, this runnable code can be tested to see if it meets the requirements set out in the requirements and design specification documents. In particular, the functionality (whether the code provides the appropriate support for user actions), usability and efficiency of the code are tested. This may then provide some recommendations for redesign which feed back into previous stages of the process and into the maintenance program once the software has been deployed. In some ways this approach relates to the example briefly introduced above – the web site development company designs and codes its web site and then uses outside consultants to test the site's usability.

It all seems so neat and rational, doesn't it? That neat and rational appearance is one of the waterfall model's main weaknesses – real world projects are simply not neat and rational, and it is difficult to follow such a simple sequence in any realistically sized project. Furthermore, the simple linear sequence of stages from analysis to testing creates its own problems. For a start, it is often hard to completely analyse requirements at the start of the project, especially user interface requirements – more requirements come to light as the project develops. Then there is the issue of runnable code. No code is developed until quite late in the project development cycle, which means that any errors in the analysis and design stages go undetected until late in the project (when the damage has been done, you might say). This compounds the problem that testing is not done until the end of the project, which means that mistakes in analysis, design, and coding are then costly and difficult to repair.
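The point about late detection can be made concrete with a toy model. The sketch below is only an illustration under stated assumptions: the four stage names are taken from the description above, the function `detection_delay` is a hypothetical helper, and "delay" simply counts how many stages pass between a mistake being introduced and the next point at which anything is evaluated. It says nothing about real project costs.

```python
from typing import Iterable

# Stage names follow the waterfall description in the text.
STAGES = ["analysis", "design", "coding", "testing"]


def detection_delay(introduced_at: str, evaluated_after: Iterable[str]) -> int:
    """Count the stages between introducing a mistake and the next evaluation.

    `evaluated_after` lists the stages that end with some form of evaluation
    (an assumption of this toy model, not a property of any real process).
    """
    start = STAGES.index(introduced_at)
    checkpoints = sorted(STAGES.index(stage) for stage in evaluated_after)
    for checkpoint in checkpoints:
        if checkpoint >= start:
            return checkpoint - start
    raise ValueError("no evaluation happens at or after this stage")


# Strict waterfall: the only evaluation is the final testing stage.
waterfall = {stage: detection_delay(stage, ["testing"]) for stage in STAGES[:-1]}

# Assumed alternative: every stage ends with its own evaluation.
every_stage = {stage: detection_delay(stage, STAGES) for stage in STAGES[:-1]}

print(waterfall)    # {'analysis': 3, 'design': 2, 'coding': 1}
print(every_stage)  # {'analysis': 0, 'design': 0, 'coding': 0}
```

In this model a mistake made during analysis survives three further stages before anyone looks for it, which is the situation the paragraph above describes.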

Using the waterfall approach can be costly in terms of redesign, but, you may ask, surely this is unavoidable? For instance, when you visit the hairdresser's for a haircut, the hairdresser usually finds out what you want first (analysis stage), and then sets about designing and implementing the haircut, i.e. they cut your hair. A fascinating aspect of having your hair cut is that there is hardly any way of knowing what the result will look like until the very end, when it may be too late. But imagine if the hairdresser had some means of showing you exactly how you would look at the end of the haircut (rather than some faded photograph of a nice haircut from the 1980s), and moreover, was able to show you how the haircut was progressing with respect to your requirements. You would then have a better idea of what the end product would be, and could even modify the design during its implementation.

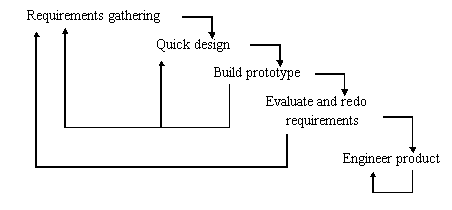

So, rather than evaluating just at the end of the development cycle, it makes more sense to evaluate and test designs and products throughout the cycle. One example of this approach is referred to as iterative prototyping (see Unit 3). The following figure illustrates this more iterative and reflective approach to development. As before, the approach starts with finding out what the user wants – but at a more general level, such as what the objectives are. These requirements then feed into some quick design process which typically concentrates on the user interface aspects of the system. Design ideas then feed into the development of one or more prototypes of the system – rough and ready systems which exhibit some of the desired characteristics. Even at this early stage feedback may inform the design and requirements stages. The prototypes are then typically evaluated in some way in order to refine the requirements. At this stage not only the customers, but also designers and potential users have their say. This iterative development of the requirements continues until both customer and designer are satisfied that they mutually agree on and understand the requirements. As before, these requirements are then used to engineer the product – note that prototypes may also be included in the requirements. There are several advantages to this approach to software development, including:

Feedback on the design can be obtained quickly, without first creating a complete working user interface. This saves both time and money.

It allows greater flexibility in the design process as each design is not necessarily worked up into a full working system. This allows experimentation with different designs and comparison between them.

Problems with the interaction can be identified and fixed before the underlying code is written. Again, this saves time and effort.

The design is kept centred on the user, not the programming – instead of primarily worrying about coding issues it is the usability of the interface that is of prime importance.
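The iterative prototyping cycle described above can be sketched as a simple loop. This is a minimal illustration, not a prescribed method: the function names (`iterate_prototypes`, `example_evaluation`), the feature names, and the idea of representing a prototype as a plain list of features are all hypothetical. The evaluation step stands in for feedback from customers, designers and potential users.

```python
from typing import Callable, List, Tuple


def iterate_prototypes(
    requirements: List[str],
    evaluate: Callable[[List[str]], List[str]],
    max_rounds: int = 10,
) -> Tuple[List[str], int]:
    """Refine requirements until an evaluation round raises nothing new.

    Returns the agreed requirements and the number of rounds that were run.
    """
    current = list(requirements)
    for round_number in range(1, max_rounds + 1):
        # Quick design: the rough prototype just exhibits the current features.
        prototype = list(current)
        new_requirements = evaluate(prototype)
        if not new_requirements:
            # Nobody raised anything new, so the requirements are agreed.
            return current, round_number
        current.extend(new_requirements)
    return current, max_rounds


def example_evaluation(prototype: List[str]) -> List[str]:
    """Hypothetical evaluation: report one missing feature per round."""
    wanted = ["search box", "undo", "keyboard shortcuts"]
    missing = [feature for feature in wanted if feature not in prototype]
    return missing[:1]


agreed, rounds = iterate_prototypes(["search box"], example_evaluation)
print(agreed)  # ['search box', 'undo', 'keyboard shortcuts']
print(rounds)  # 3
```

The `max_rounds` limit is a design choice of the sketch: real projects also need some way to stop iterating even when new requirements keep appearing.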

We can see in these two approaches two different times at which evaluation can be applied. First, there are formative evaluations, which occur while the design is being formed, e.g. at the requirements and design stage. Such evaluations help inform the design and reduce the amount of costly redesign that may be required after the product has been completed. Because such evaluation occurs early in the process, it typically evaluates designs and prototypes as opposed to finished products. In contrast, there are summative evaluations, which occur after the product has been completed – they essentially sum up the product and are usually intended to confirm that the finished product meets the agreed requirements. As these evaluations involve finished products, the errors found are likely to be costly to repair.

The waterfall model presented at the start of this unit was criticised for being too rigid and not allowing evaluation until the final product had been developed. What advantages do you think the approach could have for software design and development?

A discussion on this activity can be found at the end of the chapter.

The start of this unit discussed two approaches to software development – the waterfall model and iterative prototyping. There are many more than these two approaches – find another approach and contrast it with those presented here. Pay special attention to the role of evaluation in the approaches you find.

A discussion on this activity can be found at the end of the chapter.